Data integration is no longer just moving information; it's the strategic engine for analytics, AI, and automation. Yet, many organizations struggle with brittle pipelines, poor data quality, and security gaps, stalling innovation. The cost of failed integration is high: flawed business intelligence, wasted AI investments, and missed growth opportunities.

This article provides 10 essential data integration best practices focused on tangible outcomes. We offer actionable strategies to build a robust framework, from proactive data governance to scalable ELT pipelines on platforms like Snowflake. You'll learn how to implement API-first design, master data management (MDM), and cloud-native architectures for maximum efficiency. Each practice is illustrated with real-world use cases, helping you build a resilient, secure, and observable integration ecosystem that turns your data infrastructure into a powerful engine for innovation and measurable business value.

1. Establish Data Governance and Quality Standards

Effective integration starts with a framework that defines data ownership, accountability, and quality. This isn't just about rules; it's about creating a culture where integrated data is consistent, reliable, and trustworthy for analytics and operations. Strong governance prevents the classic "garbage in, garbage out" scenario, so that integration efforts deliver value instead of propagating errors.

This approach ensures ingested data adheres to predefined quality metrics and business rules. The primary outcome is decision-ready data that fuels accurate AI models, reliable BI dashboards, and compliant reporting. For example, a global retailer's governance framework ensures product data consistency across all stores, enabling precise inventory management and a seamless customer experience. Similarly, a bank relies on strict governance to manage customer data across platforms, meeting GDPR requirements and avoiding hefty fines.

Actionable Implementation Steps

- Establish a Data Governance Committee: Form a cross-functional team (IT, legal, business units) to define policies and champion data stewardship.

- Start with Critical Data Domains: Prioritize high-impact domains like "Customer" or "Product" to demonstrate immediate business value.

- Implement Automated Data Quality Monitoring: Use tools to continuously monitor metrics like completeness, uniqueness, and timeliness, with alerts for anomalies.

- Document and Socialize Standards: Create a central repository for data definitions and policies to foster trust and prevent misinterpretation.
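To make automated quality monitoring concrete, here is a minimal Python sketch that computes completeness, uniqueness, and timeliness for a batch of records and raises alerts against thresholds. The field names (`customer_id`, `email`, `updated_at`) and the threshold values are illustrative assumptions. In practice the governance committee would set thresholds per data domain, and a scheduler or data quality tool would run the check continuously.

```python
from datetime import datetime, timedelta, timezone

# Illustrative thresholds (assumptions); set these per data domain.
THRESHOLDS = {"completeness": 0.95, "uniqueness": 1.0, "freshness_hours": 24}


def quality_metrics(rows, key, required, ts_field, now):
    """Compute completeness, uniqueness, and freshness for one batch of records."""
    if not rows:
        raise ValueError("empty batch: nothing to measure")
    total = len(rows)
    # Completeness: share of rows where every required field is populated.
    complete = sum(all(r.get(f) not in (None, "") for f in required) for r in rows)
    # Uniqueness: distinct business keys per row (1.0 means no duplicates).
    distinct = len({r.get(key) for r in rows})
    # Timeliness: hours since the most recently updated record.
    newest = max(r[ts_field] for r in rows)
    return {
        "completeness": complete / total,
        "uniqueness": distinct / total,
        "freshness_hours": (now - newest).total_seconds() / 3600,
    }


def check(metrics, thresholds=THRESHOLDS):
    """Return an alert message for every metric outside its threshold."""
    alerts = []
    for name in ("completeness", "uniqueness"):
        if metrics[name] < thresholds[name]:
            alerts.append(f"{name} {metrics[name]:.2f} is below {thresholds[name]}")
    if metrics["freshness_hours"] > thresholds["freshness_hours"]:
        alerts.append(f"newest record is {metrics['freshness_hours']:.1f}h old")
    return alerts


if __name__ == "__main__":
    now = datetime(2024, 5, 1, 12, 0, tzinfo=timezone.utc)
    batch = [
        {"customer_id": 1, "email": "a@example.com", "updated_at": now - timedelta(hours=2)},
        {"customer_id": 2, "email": None, "updated_at": now - timedelta(hours=1)},
        {"customer_id": 2, "email": "b@example.com", "updated_at": now - timedelta(minutes=30)},
    ]
    m = quality_metrics(batch, "customer_id", ["customer_id", "email"], "updated_at", now)
    for alert in check(m):
        print("ALERT:", alert)  # a real monitor would page someone or post to a channel
```

In this sample batch, the missing email and the duplicated `customer_id` each trip an alert, while freshness stays within its limit.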

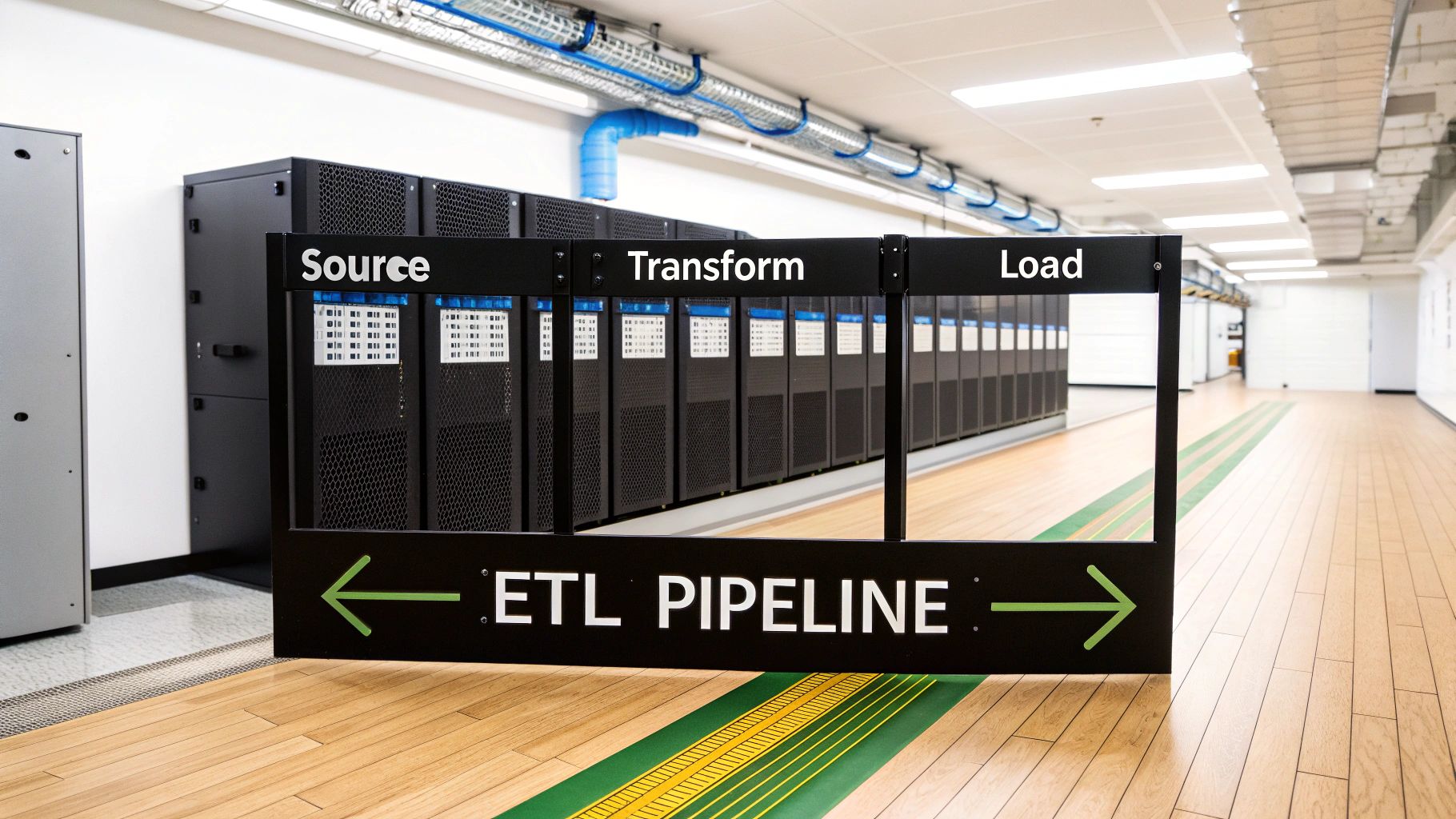

2. Architect Scalable ETL/ELT Pipelines

A well-designed pipeline defines how data moves from sources to a target like a data warehouse. While traditional ETL transforms data before loading, the modern ELT approach leverages the power of cloud platforms like Snowflake to perform transformations directly in the warehouse. This is a critical data integration best practice for achieving scalability and flexibility.

The ELT paradigm allows organizations to load raw data first, giving data scientists immediate access while transformations run in parallel. The primary outcome is accelerated time-to-insight and enhanced agility. A streaming service, for example, processes petabytes of data daily using ELT pipelines to personalize user recommendations in near real-time. This robust architecture ensures data is not just moved, but made readily available for high-value business outcomes.

Actionable Implementation Steps

- Prioritize Incremental Loading: Use Change Data Capture (CDC) to process only new or changed data, reducing compute costs and processing times.

- Design for Idempotency: Ensure that rerunning a pipeline produces the same result, preventing data duplication and making it resilient to failures.

- Containerize Pipeline Components: Use technologies like Docker and Kubernetes to ensure consistency across environments and simplify scaling. You can explore how to build a modern data platform with a Snowflake Partner.

- Implement Comprehensive Logging and Monitoring: Track KPIs like data volume, latency, and error rates to proactively identify and resolve issues.
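The sketch below illustrates idempotent, incremental loading, using Python's built-in `sqlite3` module as a stand-in for a warehouse. It assumes a hypothetical `orders` table and uses a timestamp high-water mark as a simple substitute for true log-based CDC. Because the write is an upsert (`INSERT ... ON CONFLICT DO UPDATE`, available in SQLite 3.24 and later), a retried run leaves the table in the same state instead of duplicating rows.

```python
import sqlite3

# Upsert keyed on the primary key: re-applying the same row is a no-op in effect.
UPSERT = (
    "INSERT INTO orders (id, status, updated_at) "
    "VALUES (:id, :status, :updated_at) "
    "ON CONFLICT(id) DO UPDATE SET "
    "status = excluded.status, updated_at = excluded.updated_at"
)


def load_increment(conn, batch, watermark):
    """Upsert rows changed after `watermark` and return the new watermark.

    Running this twice with the same inputs leaves the table unchanged,
    so a failed run can simply be retried.
    """
    # Incremental: only rows newer than the last successful load.
    changed = [r for r in batch if r["updated_at"] > watermark]
    with conn:  # single transaction: all rows commit or none do
        conn.executemany(UPSERT, changed)
    return max((r["updated_at"] for r in changed), default=watermark)


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, status TEXT, updated_at TEXT)")
    batch = [
        {"id": 1, "status": "new", "updated_at": "2024-05-01T10:00"},
        {"id": 2, "status": "paid", "updated_at": "2024-05-01T11:00"},
    ]
    wm = load_increment(conn, batch, "2024-05-01T00:00")
    load_increment(conn, batch, "2024-05-01T00:00")  # simulated retry: no duplicates
    print(wm, conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0])
```

In a warehouse such as Snowflake, the equivalent step would typically be a `MERGE` statement, and the watermark would be persisted alongside the load rather than returned to the caller.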

3. Adopt an API-First Integration Approach

An API-first approach prioritizes real-time, bidirectional data exchange over traditional batch processing. Instead of building brittle point-to-point connections, this strategy establishes a network of reusable, well-documented APIs that serve as the contractual interface for all data interactions, creating a more agile and scalable ecosystem.

This method supports microservices and cloud-native applications, enabling systems to share data on demand. The key outcome is a decoupled and flexible architecture where systems can be updated or replaced independently without breaking the entire data flow. For instance, a payment processor’s API-first foundation allows businesses to integrate complex payment services with just a few lines of code. Similarly, an e-commerce platform’s extensive APIs empower developers to build apps that seamlessly extend its core functionality.

Actionable Implementation Steps

- Design APIs Before Implementation: Use tools like OpenAPI (Swagger) to define the API contract first, allowing teams to work in parallel.

- Utilize an API Gateway: Centralize functions like authentication, rate limiting, and logging to enhance security and manageability.

- Implement Robust Versioning: Include a versioning strategy (e.g., /v1/, /v2/) in your API URIs to introduce changes without disrupting existing integrations.

- Create Comprehensive Documentation: Use interactive tools to help developers test endpoints directly, accelerating adoption and reducing support overhead.
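URI versioning can be illustrated without any web framework. This Python sketch routes hypothetical `/v1/` and `/v2/` customer paths to separate handlers, so the v2 response shape (split name fields) ships without changing what existing v1 clients receive. The in-memory `CUSTOMERS` store and the handler names are assumptions for illustration; a production service would sit behind an API gateway and be described in an OpenAPI document.

```python
import re

# Hypothetical data store; a real service would query a database.
CUSTOMERS = {42: {"first_name": "Ada", "last_name": "Lovelace"}}


def get_customer_v1(cid):
    c = CUSTOMERS[cid]
    # v1 contract: one combined "name" field, frozen once published.
    return {"id": cid, "name": f"{c['first_name']} {c['last_name']}"}


def get_customer_v2(cid):
    c = CUSTOMERS[cid]
    # v2 contract: split name fields, added without breaking v1 clients.
    return {"id": cid, "first_name": c["first_name"], "last_name": c["last_name"]}


ROUTES = {("v1", "customers"): get_customer_v1, ("v2", "customers"): get_customer_v2}
PATH = re.compile(r"^/(v\d+)/([a-z]+)/(\d+)$")


def dispatch(path):
    """Return (status_code, body) for a GET on a versioned resource path."""
    match = PATH.match(path)
    if not match:
        return 404, {"error": "not found"}
    version, resource, raw_id = match.groups()
    handler = ROUTES.get((version, resource))
    if handler is None:
        return 404, {"error": f"unsupported version or resource: {version}/{resource}"}
    cid = int(raw_id)
    if cid not in CUSTOMERS:
        return 404, {"error": "customer not found"}
    return 200, handler(cid)


if __name__ == "__main__":
    for p in ("/v1/customers/42", "/v2/customers/42", "/v3/customers/42"):
        print(p, dispatch(p))
```

Retiring v1 later becomes a single, visible change to `ROUTES`, which is easier to announce and audit than silently altering a response shape.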

4. Implement Master Data Management (MDM)

Master Data Management (MDM) establishes a single source of truth for critical data assets like customers, products, and suppliers. This practice consolidates, cleanses, and governs master data in a central system, ensuring it remains consistent and accurate across all applications. MDM directly solves the challenge of conflicting and duplicate information that derails analytics and operations.

By creating a "golden record" for core business entities, MDM provides a reliable foundation for all data-driven activities. The primary outcome is enterprise-wide data consistency, eliminating silos and supporting a unified view of the business. For example, a global CPG company can use MDM to create a single view of its customers, streamlining marketing and improving regulatory compliance. This is one of the most impactful data integration best practices for achieving operational excellence.

Actionable Implementation Steps

- Prioritize a High-Value Data Domain: Start with a critical domain like "Customer" to demonstrate immediate ROI and build momentum.

- Involve Business Stakeholders Early: Engage business leaders to define master data attributes and governance policies that meet real-world needs.

- Establish Clear Data Stewardship Roles: Assign ownership for each data domain to maintain quality, resolve conflicts, and enforce standards.

- Adopt a Phased Rollout Approach: Implement MDM incrementally, system by system, to minimize disruption and reduce implementation risk.
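At the core of most MDM hubs are a match rule (which records describe the same entity) and survivorship rules (which value wins for each attribute). This Python sketch assumes a deliberately simple rule set: records match on normalized email, and each attribute takes the most recently updated non-empty value. The source names and fields are hypothetical, and commercial MDM tools use richer fuzzy matching and configurable rules.

```python
from collections import defaultdict

SURVIVING_FIELDS = ("name", "phone", "address")


def match_key(record):
    """Hypothetical match rule: the normalized email identifies one customer."""
    return record["email"].strip().lower()


def golden_records(records):
    """Cluster source records by match key and build one golden record per cluster."""
    clusters = defaultdict(list)
    for rec in records:
        clusters[match_key(rec)].append(rec)
    golden = []
    for key, recs in clusters.items():
        # Survivorship rule (assumed): the newest non-empty value wins per attribute.
        newest_first = sorted(recs, key=lambda r: r["updated_at"], reverse=True)
        merged = {"email": key, "sources": sorted(r["source"] for r in recs)}
        for f in SURVIVING_FIELDS:
            merged[f] = next((r[f] for r in newest_first if r.get(f)), None)
        golden.append(merged)
    return golden


if __name__ == "__main__":
    records = [
        {"source": "crm", "email": "Ada@Example.com ", "name": "Ada Lovelace",
         "phone": None, "address": "12 St James Sq", "updated_at": "2024-03-02"},
        {"source": "billing", "email": "ada@example.com", "name": "A. Lovelace",
         "phone": "555-0100", "address": None, "updated_at": "2024-01-10"},
        {"source": "erp", "email": "bob@example.com", "name": "Bob",
         "phone": None, "address": None, "updated_at": "2024-02-01"},
    ]
    for g in golden_records(records):
        print(g)
```

Keeping the contributing `sources` on each golden record preserves a trail back to the originating systems, which data stewards need when resolving disputed values.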

5. Leverage Cloud-Native Integration

A cloud-native approach means building integrations that fully leverage cloud capabilities like serverless functions, managed services, and auto-scaling. This creates resilient, elastic, and cost-efficient data pipelines by minimizing infrastructure management and maximizing operational agility. It's a critical data integration best practice for any modern enterprise building for scale and speed.

This methodology lets organizations pay only for consumed resources, automatically scaling to handle massive data volumes without manual intervention. The primary outcome is a highly efficient and responsive data architecture that adapts to fluctuating business demands. For instance, a social media company can use AWS Lambda for event-driven integrations, processing billions of events daily in a cost-effective, scalable manner. Similarly, a ride-sharing service can analyze real-time telemetry data to dynamically optimize logistics and pricing.

Actionable Implementation Steps

- Prioritize Managed Services: Use services like AWS Glue, Google Cloud Dataflow, or Azure Data Factory to handle infrastructure, patching, and scaling.

- Embrace Serverless Computing: Use functions-as-a-service (FaaS) for event-driven tasks like lightweight transformations or data validation.

- Leverage Containerization for Portability: Package applications with Docker and orchestrate them with Kubernetes for consistency and multi-cloud portability.

- Optimize for Cost from Day One: Implement cost management practices like tagging and choosing appropriate instance types to ensure financial sustainability.
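For the serverless step, here is a minimal sketch of a Python AWS Lambda function triggered by an SQS queue. It uses the standard `lambda_handler(event, context)` entry point and returns the `batchItemFailures` partial-batch response, which Lambda honors only when `ReportBatchItemFailures` is enabled on the event source mapping. The message payload fields, the transform, and the in-memory `SINK` list (standing in for a write to S3 or a warehouse stage) are assumptions.

```python
import json
from decimal import Decimal, InvalidOperation

SINK = []  # stand-in for a write to S3 or a warehouse stage (assumption)


def transform(payload):
    """Hypothetical lightweight transform: normalize amount and currency."""
    return {
        "order_id": payload["order_id"],
        # Decimal avoids binary floating-point rounding on money values.
        "amount_cents": int(Decimal(str(payload["amount"])) * 100),
        "currency": str(payload.get("currency", "USD")).upper(),
    }


def lambda_handler(event, context):
    """Entry point for an SQS-triggered Lambda; reports failures per message."""
    failures = []
    for record in event.get("Records", []):
        try:
            SINK.append(transform(json.loads(record["body"])))
        except (KeyError, TypeError, ValueError, InvalidOperation):
            # Only this message is retried; the rest of the batch is acknowledged.
            failures.append({"itemIdentifier": record["messageId"]})
    return {"batchItemFailures": failures}
```

Returning failed message IDs, rather than raising, lets Lambda retry only the bad messages; once a message exceeds the queue's configured maximum receive count, an SQS redrive policy can move it to a dead-letter queue.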

6. Adopt Real-Time and Event-Driven Integration

Traditional batch processing is too slow for use cases that demand immediate action. A real-time, event-driven architecture processes and integrates data as events happen, using streaming platforms such as Apache Kafka to decouple the systems that produce events from those that consume them. This is a crucial data integration best practice for building responsive, scalable, and resilient data ecosystems.

This paradigm enables powerful outcomes like fraud detection, dynamic pricing, and personalized user experiences. For example, a professional networking site can leverage Apache Kafka to process billions of events daily, powering its real-time activity feeds. A food delivery service's event-driven model ensures that order updates and driver assignments occur instantaneously. The primary outcome is operational agility, allowing businesses to react to opportunities and threats in the moment. Learn more about how we harness time-series data with Snowflake to drive these capabilities.

Actionable Implementation Steps

- Define Clear and Versioned Event Schemas: Use a schema registry (such as Confluent Schema Registry) together with a serialization format like Avro or Protobuf to enforce a strict contract for event data structures and reject incompatible, breaking changes.

- Implement Idempotent Consumers: Design consumer applications to process duplicate messages without side effects such as double-counting, because at-least-once delivery can redeliver the same event after a retry or consumer rebalance.

- Utilize Dead-Letter Queues (DLQs): Automatically route messages that fail processing to a DLQ, preventing a single bad message from halting the entire pipeline.

- Monitor Consumer Lag and Throughput: Actively track key metrics like consumer lag to identify processing bottlenecks and maintain real-time performance.
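The consumer-side practices can be sketched independently of any broker. In this Python example, a hypothetical `IdempotentConsumer` skips events whose `event_id` it has already processed (the usual symptom of at-least-once redelivery) and parks malformed events in a dead-letter list instead of stopping the stream. The account-crediting logic and field names are assumptions; with Apache Kafka, processed IDs would need durable storage and dead letters would typically go to a separate topic.

```python
from dataclasses import dataclass, field


@dataclass
class IdempotentConsumer:
    """In-memory sketch: dedupe by event_id, route poison messages to a DLQ."""
    seen_ids: set = field(default_factory=set)
    balances: dict = field(default_factory=dict)
    dead_letters: list = field(default_factory=list)

    def apply(self, event):
        # Business logic (assumed): credit an account. Raises on malformed events
        # before mutating any state, so a failure never leaves a partial update.
        amount = int(event["amount"])
        account = event["account"]
        self.balances[account] = self.balances.get(account, 0) + amount

    def handle(self, event):
        try:
            event_id = event["event_id"]
            if event_id in self.seen_ids:
                return "duplicate"  # redelivered event: skip, don't double-credit
            self.apply(event)
        except (KeyError, TypeError, ValueError) as exc:
            # Poison message: keep it with context instead of halting the consumer.
            self.dead_letters.append({"event": event, "error": repr(exc)})
            return "dead-lettered"
        self.seen_ids.add(event_id)
        return "processed"


if __name__ == "__main__":
    consumer = IdempotentConsumer()
    stream = [
        {"event_id": "e1", "account": "A", "amount": "50"},
        {"event_id": "e1", "account": "A", "amount": "50"},   # redelivery
        {"event_id": "e2", "account": "A", "amount": "oops"},  # malformed
    ]
    print([consumer.handle(e) for e in stream], consumer.balances)
```

Recording the event ID in the same transaction as the side effect is what makes deduplication reliable in production; an in-memory set only survives as long as the process does.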

7. Implement Data Lineage and Impact Analysis

Understanding where data comes from, how it changes, and where it goes is fundamental to trust. Data lineage provides a complete audit trail of the data's journey, from origin to final destination. This practice is essential for debugging pipelines, ensuring regulatory compliance, and performing impact analysis before making changes. It transforms your data ecosystem from a black box into a transparent, traceable asset.

This approach provides context, enabling teams to quickly troubleshoot issues and validate data accuracy. The primary outcome is enhanced trust and operational agility, as developers can confidently modify pipelines knowing the full impact. For example, a financial institution can use lineage to trace a figure in a regulatory report back to its source transaction, proving integrity to auditors. An e-commerce platform can see which dashboards would be affected by a schema change, preventing unexpected breakages.

Actionable Implementation Steps

- Automate Lineage Collection: Use modern integration tools that automatically parse SQL queries and ETL scripts to capture column-level lineage.

- Integrate with a Data Catalog: Push lineage metadata into a central data catalog to make it discoverable for both technical and business users.

- Visualize the Data Flow: Use tools to create clear, interactive diagrams that show the end-to-end flow of data, making it easier to identify root causes of issues.

- Use Lineage for Change Management: Before deploying changes, use the lineage graph to perform an impact assessment and proactively identify all downstream assets that will be affected.
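Impact analysis over a lineage graph is essentially a graph traversal. This Python sketch assumes lineage has already been collected into a simple adjacency map (the asset names are hypothetical) and walks it breadth-first to list every downstream asset that a change could affect. Lineage tools expose similar graphs, often at column level, through their own interfaces.

```python
from collections import deque

# Hypothetical lineage: each asset maps to the assets built directly from it.
LINEAGE = {
    "raw.orders": ["staging.orders"],
    "raw.customers": ["staging.customers"],
    "staging.orders": ["marts.daily_revenue", "marts.customer_ltv"],
    "staging.customers": ["marts.customer_ltv"],
    "marts.daily_revenue": ["dashboard.exec_kpis"],
    "marts.customer_ltv": ["dashboard.marketing", "ml.churn_features"],
}


def downstream_impact(asset, graph=LINEAGE):
    """Breadth-first walk returning every asset affected by a change to `asset`."""
    affected, queue = set(), deque([asset])
    while queue:
        for child in graph.get(queue.popleft(), []):
            if child not in affected:  # shared descendants are visited only once
                affected.add(child)
                queue.append(child)
    return sorted(affected)


if __name__ == "__main__":
    # Before altering staging.customers, list who needs to be notified.
    print(downstream_impact("staging.customers"))
```

Running this check in a deployment pipeline turns "which dashboards will break?" from a guess into a reviewable list attached to the change.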

8. Enforce Data Validation and Error Handling

Robust data validation and error handling are the active mechanisms that enforce data quality within your pipelines. This practice involves embedding checks directly into the data flow to catch, log, and manage anomalies before they corrupt downstream systems. By systematically validating data against predefined rules, you create a resilient process that prevents bad data from ever reaching its destination.

This proactive approach ensures that only clean, accurate information fuels your analytics and applications. The primary outcome is operational integrity, where business processes run smoothly on reliable data. For instance, a payment processor performs millions of real-time validations to ensure transaction data is correct, preventing failed payments. Healthcare systems validate incoming patient data against master records to prevent duplicates and ensure clinical accuracy, which is vital for patient safety.

Actionable Implementation Steps

- Implement Multi-Level Validation: Apply checks at ingestion (schema validation), during transformation (business rule validation), and before loading (referential integrity).

- Establish a Quarantine Zone: Move failed records to a dedicated "quarantine" area for analysis and reprocessing without halting the pipeline.

- Create Detailed and Actionable Error Logs: Log errors with rich context (error type, failed record, violated rule) for rapid troubleshooting.

- Automate Remediation Where Possible: Build automated logic to fix common, predictable errors (e.g., standardizing date formats), reducing manual intervention.
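The following Python sketch strings these steps together for a hypothetical orders feed: schema checks at ingestion, business rules (including automatic remediation of a few date formats) during transformation, and a referential check against known customers before load. Failing records go to a quarantine list with stage, rule, and record context instead of halting the batch. The schema, the accepted date formats (including the day-first `%d/%m/%Y`), and the function names are assumptions to adapt to your sources.

```python
from datetime import datetime

# Assumed contract for incoming records and accepted date inputs.
SCHEMA = {"order_id": str, "customer_id": int, "amount": (int, float), "order_date": str}
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%Y%m%d")


def normalize_date(value):
    """Automated remediation: coerce known date formats to ISO 8601."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value, fmt).date().isoformat()
        except (TypeError, ValueError):
            continue
    raise ValueError(f"unrecognized date: {value!r}")


def _error(record, stage, rule):
    # Rich context for troubleshooting: where it failed, which rule, which record.
    return {"stage": stage, "rule": rule, "record": record}


def validate(record, known_customer_ids):
    """Return (clean_record, None) on success or (None, error) on failure."""
    # Level 1 (ingestion): required fields with expected types.
    for name, expected in SCHEMA.items():
        if not isinstance(record.get(name), expected):
            return None, _error(record, "schema", f"{name} missing or wrong type")
    clean = dict(record)
    # Level 2 (transformation): business rules, with date remediation first.
    try:
        clean["order_date"] = normalize_date(record["order_date"])
    except ValueError as exc:
        return None, _error(record, "business_rule", str(exc))
    if clean["amount"] <= 0:
        return None, _error(record, "business_rule", "amount must be positive")
    # Level 3 (pre-load): referential integrity against the customer dimension.
    if clean["customer_id"] not in known_customer_ids:
        return None, _error(record, "referential", "unknown customer_id")
    return clean, None


def run_batch(records, known_customer_ids):
    """Split a batch into loadable rows and a quarantine; bad rows never halt it."""
    loaded, quarantine = [], []
    for r in records:
        clean, err = validate(r, known_customer_ids)
        if err is not None:
            quarantine.append(err)
        else:
            loaded.append(clean)
    return loaded, quarantine
```

Because each quarantine entry keeps the original record, fixed rows can be replayed through `run_batch` unchanged once the underlying issue is resolved.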

9. Maintain a Metadata and Data Catalog

Integration efforts are wasted if teams cannot find, understand, or trust available data. A data catalog addresses this by creating a centralized, searchable inventory of all data assets. This practice involves organizing metadata (data about data) to document everything from source and ownership to business context and quality scores, acting as a search engine for your enterprise data.

This allows analysts and data scientists to independently discover relevant datasets and assess their suitability for a task. The primary outcome is accelerated time-to-insight, as ambiguity around data is eliminated. For example, an open-source data catalog at a large tech company allows engineers to trace data flows from raw logs to dashboards, simplifying debugging. An internal catalog at another firm enables teams to find and reuse existing tables, preventing redundant integration work.

Actionable Implementation Steps

- Automate Metadata Ingestion: Use automated crawlers to scan databases and BI tools to extract technical metadata like schemas and column names.

- Enrich with Business Context: Empower data stewards to add business definitions and usage notes through a user-friendly interface.

- Visualize Data Lineage: Integrate tools that automatically map and visualize data lineage to improve transparency and aid in root cause analysis.

- Integrate with Analytics Tools: Ensure your catalog integrates with tools like SQL clients and BI platforms to allow data discovery within existing workflows.
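As a small illustration of crawling plus enrichment, this Python sketch reads table and column metadata from SQLite's own system catalog (`sqlite_master` and `PRAGMA table_info`), lets a steward attach a description, owner, and tags, and offers a naive keyword search. The catalog structure and the `crawl`, `enrich`, and `search` functions are hypothetical; dedicated catalog products provide crawlers for many sources and far richer search.

```python
import sqlite3


def crawl(conn):
    """Automated ingestion: pull technical metadata from SQLite's system catalog."""
    catalog = {}
    tables = conn.execute(
        "SELECT name FROM sqlite_master "
        "WHERE type = 'table' AND name NOT LIKE 'sqlite_%' ORDER BY name"
    ).fetchall()
    for (table,) in tables:
        quoted = '"' + table.replace('"', '""') + '"'
        # PRAGMA table_info rows: (cid, name, type, notnull, dflt_value, pk)
        cols = conn.execute(f"PRAGMA table_info({quoted})").fetchall()
        catalog[table] = {
            "columns": {c[1]: c[2] for c in cols},
            "description": "",  # business context, added later by a steward
            "owner": None,
            "tags": set(),
        }
    return catalog


def enrich(catalog, table, description, owner, tags):
    """Layer steward-supplied business context onto crawled technical metadata."""
    entry = catalog[table]
    entry.update(description=description, owner=owner)
    entry["tags"].update(tags)


def search(catalog, term):
    """Naive keyword search over table names, columns, descriptions, and tags."""
    term = term.lower()
    hits = []
    for table, e in catalog.items():
        haystack = [table, e["description"], *e["columns"], *e["tags"]]
        if any(term in h.lower() for h in haystack):
            hits.append(table)
    return sorted(hits)


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)")
    conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT)")
    cat = crawl(conn)
    enrich(cat, "orders", "One row per checkout; revenue source of truth", "sales-data", {"finance"})
    print(search(cat, "revenue"), search(cat, "customer"))
```

Separating the crawled fields from the steward-owned fields lets the crawler re-run on a schedule without overwriting human-written context.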

10. Use Incremental Loading and Change Data Capture (CDC)

Moving beyond full-batch transfers is essential for efficiency. Incremental loading, powered by Change Data Capture (CDC), is a best practice that focuses on moving only the data that has changed. This method avoids the resource consumption of reloading entire datasets, drastically reducing network bandwidth, processing power, and latency.

This approach is transformative for use cases requiring fresh data, such as real-time inventory management or fraud detection. The primary outcome is reduced data latency and lower infrastructure costs. An e-commerce platform using CDC can stream order updates from its transactional database to a data warehouse in seconds, enabling immediate analysis of sales trends. Financial institutions use it to replicate transactions to fraud detection systems instantly, blocking suspicious activity before it causes damage.

Actionable Implementation Steps

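- Choose the Right CDC Method: Evaluate each source system to select an approach (log-based, trigger-based, or timestamp/query-based). Log-based CDC, which reads the database's transaction log, usually has the least performance impact on the source.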

- Plan for Schema Evolution: Implement a process to automatically detect and handle schema drifts (e.g., new columns) to prevent pipeline failures.

- Implement Robust Monitoring and Alerting: Monitor for latency, data discrepancies, and source system connectivity, with alerts for any failures.

- Design for Fault Tolerance and Recovery: Ensure your pipeline can survive outages by checkpointing its position in the change log, so it resumes where it left off, and by routing records that repeatedly fail to a dead-letter queue for later reprocessing.
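The sketch below applies a stream of CDC events to an in-memory replica. It assumes each event carries an operation, a monotonically increasing log position (`lsn`), a primary key, and the changed columns; this is roughly the shape log-based tools emit, but not any specific tool's format, and `CdcTarget` is a hypothetical name. Events at or below the stored checkpoint are skipped so a replay after an outage does not double-apply changes, and previously unseen columns widen the target schema instead of failing the pipeline.

```python
from dataclasses import dataclass, field


@dataclass
class CdcTarget:
    """In-memory replica fed by a change stream (a sketch, not a CDC tool's API)."""
    rows: dict = field(default_factory=dict)   # primary key -> row
    columns: set = field(default_factory=set)  # currently known target schema
    checkpoint: int = 0                        # last applied log position

    def apply(self, events):
        for ev in sorted(events, key=lambda e: e["lsn"]):
            if ev["lsn"] <= self.checkpoint:
                continue  # applied before a restart: skip so it isn't applied twice
            if ev["op"] in ("insert", "update"):
                new_cols = set(ev["row"]) - self.columns
                if new_cols:
                    # Schema drift: widen the target instead of failing the load.
                    self.columns |= new_cols
                    for row in self.rows.values():
                        for c in new_cols:
                            row.setdefault(c, None)
                merged = {c: None for c in self.columns}
                merged.update(self.rows.get(ev["key"], {}))
                merged.update(ev["row"])  # partial updates merge onto the current row
                self.rows[ev["key"]] = merged
            elif ev["op"] == "delete":
                self.rows.pop(ev["key"], None)
            else:
                raise ValueError(f"unknown op {ev['op']!r} at lsn {ev['lsn']}")
            # A real pipeline commits this together with the row change.
            self.checkpoint = ev["lsn"]
```

If an unknown operation raises mid-batch, `checkpoint` still points at the last successfully applied event, so a restart resumes from the right place. In production the checkpoint must be committed atomically with the applied rows; otherwise a crash between the two writes can skip or repeat changes.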

Top 10 Data Integration Best Practices Comparison

| Approach | Implementation Complexity 🔄 | Resources & Maintenance ⚡ | Expected Outcomes / Impact 📊 | Ideal Use Cases 💡 | Key Advantages ⭐ |
|---|---|---|---|---|---|
| Data Governance and Quality Standards | 🔄🔄🔄 (policy + org change) | ⚡⚡⚡ (people, tools, ongoing) | 📊⭐⭐⭐⭐ (consistent, compliant data) | Regulated industries; enterprise-wide reporting | Ensures accuracy, compliance, stakeholder trust |
| ETL/ELT Pipeline Architecture | 🔄🔄 (engineering design + ops) | ⚡⚡⚡ (compute, orchestration, monitoring) | 📊⭐⭐⭐⭐ (automated, scalable data availability) | Large-volume batch/near-real-time ingestion | Automates flows, scalable, reduces manual work |
| API-First Integration Approach | 🔄🔄 (design + security complexity) | ⚡⚡ (runtime ops, gateways, docs) | 📊⭐⭐⭐⭐ (real-time sync, flexible integrations) | Microservices, external partners, real-time apps | Decoupling, flexibility, easier updates |
| Master Data Management (MDM) | 🔄🔄🔄🔄 (complex modeling & governance) | ⚡⚡⚡⚡ (central systems, stewardship) | 📊⭐⭐⭐⭐ (single source of truth) | Customer/product/supplier consolidation at scale | Eliminates duplicates, improves consistency |
| Cloud-Native Integration | 🔄🔄 (architecture + cloud patterns) | ⚡⚡ (managed services, pay-as-you-go) | 📊⭐⭐⭐⭐ (fast deployment, auto-scaling) | Elastic workloads, serverless-first initiatives | Reduced infra ops, better scalability |
| Real-Time and Event-Driven Integration | 🔄🔄🔄 (stream design, ordering, semantics) | ⚡⚡⚡ (streaming infra, monitoring) | 📊⭐⭐⭐⭐ (immediate availability, reactive UX) | Low-latency needs, streaming analytics, orders/events | Enables real-time analytics and decoupling |
| Data Lineage and Impact Analysis | 🔄🔄🔄 (metadata capture + mapping) | ⚡⚡ (catalog tools, updates) | 📊⭐⭐⭐ (traceability, faster troubleshooting) | Change management, compliance, ETL audits | Reveals provenance and change impact |
| Data Validation and Error Handling | 🔄🔄 (rules + remediation flows) | ⚡⚡ (validation engines, DLQs) | 📊⭐⭐⭐⭐ (higher data reliability) | Critical pipelines, finance/healthcare data flows | Prevents bad data propagation; faster fixes |
| Metadata Management and Cataloging | 🔄🔄🔄 (inventorying + governance) | ⚡⚡⚡ (catalog tools, curation) | 📊⭐⭐⭐ (better discovery, faster insights) | Large data estates, self-service analytics | Improves findability and governance |
| Incremental Loading & Change Data Capture (CDC) | 🔄🔄🔄 (source support + edge cases) | ⚡⚡ (low bandwidth, monitoring) | 📊⭐⭐⭐⭐ (efficient sync, near real-time) | High-volume DB syncs, low-latency replication | Reduces transfer cost; enables timely syncs |

Building Your Future-Proof Data Integration Strategy

Navigating data integration is a core strategic imperative. We've explored the pillars of a robust framework, from foundational Data Governance and MDM to advanced patterns like API-First Design, Cloud-Native Integration, and Event-Driven Architectures. We've covered building efficient pipelines with ELT and CDC, maintaining integrity through Validation and Error Handling, and making data traceable and discoverable with Lineage and Cataloging. The goal is not just to connect systems, but to build a resilient, scalable, and intelligent data ecosystem.

This journey transforms data from a siloed resource into a dynamic, enterprise-wide asset that directly powers business outcomes. For a logistics company, real-time integration means optimizing fleet routes based on live traffic data to slash fuel costs. For a telecom firm, a well-governed data platform enables predictive maintenance, preventing outages. A mature data integration strategy is the engine for operational excellence and innovation.

From Theory to Tangible Value: Your Next Steps

Adopting these practices is an ongoing commitment that requires a cultural shift. To translate these concepts into action, consider these steps:

- Conduct a Capability Audit: Assess your current architecture against these practices. Where are the biggest gaps? Are you relying on outdated batch processes where real-time streams would deliver more value?

- Prioritize a Pilot Project: Select a high-impact, low-risk business area to pilot a modern integration pattern, such as implementing a CDC pipeline for a critical operational system. A successful pilot builds momentum and demonstrates tangible ROI.

- Invest in Metadata and Observability: Implement a Data Catalog and establish clear Data Lineage. These tools empower developers to build faster and analysts to trust the data they use, accelerating the entire analytics lifecycle.

The Ultimate Goal: A Cohesive and Agile Data Fabric

Ultimately, these practices lead to a cohesive data fabric—an intelligent layer that abstracts away the complexity of underlying data sources. This fabric ensures that high-quality, trusted data is readily available to any application, analytics platform, or AI model that needs it.

This is the future of enterprise data management: a state where integration is an enabler of agility, not a bottleneck. It’s about creating an environment where a new AI-powered automation can be deployed in days, not months, because the underlying data is already accessible, governed, and reliable. As you move forward, remember that each best practice you implement is a deliberate step toward transforming your organization into a truly data-driven powerhouse.